The Amazon EKS Access Trap: How Not to Lose Control Over Your Own Clusters

While some will have already worked with the Amazon Elastic Kubernetes Service (EKS) — and are therefore perfectly familiar with this problem — we hope this serves as an important reminder of the true risk of losing control over your infrastructure.

To those of you who know very little about Kubernetes clusters and their role-based access control (RBAC), however, consider it a blessed revelation!

Taking Over a Project From an Existing Supplier

Recently, Software Planet Group were asked to lend a hand in a software project. We use the phrase “lend a hand” very deliberately, as we initially had nothing at all to do with the project. In fact, what our software developers were met with was a project in the direst of states.

While the previous provider had appropriately set up the development environment, testing environment and production servers, we had no access to an important portion of the system. To make matters worse, the project’s documentation was nowhere to be found, and any technical consultation with the previous contractor was out of the question. Essentially, it was like walking into a brand new home and being locked out of the master bedroom.

Thankfully, however, we did have a definite goal in mind as we stumbled around this “property” and examined the local layout. We also keenly understood that in order to achieve our objectives, we would have to break into a Kubernetes cluster.

The Uncovered Truth About Amazon EKS Clusters

Yes, ladies and gentlemen, a break-in was about to occur, but we promise it’s not nearly as bad as it seems. You see, in an attempt to protect their own data, our customers had deleted the profiles of their previous providers and had inadvertently locked themselves out of a cluster in the process.

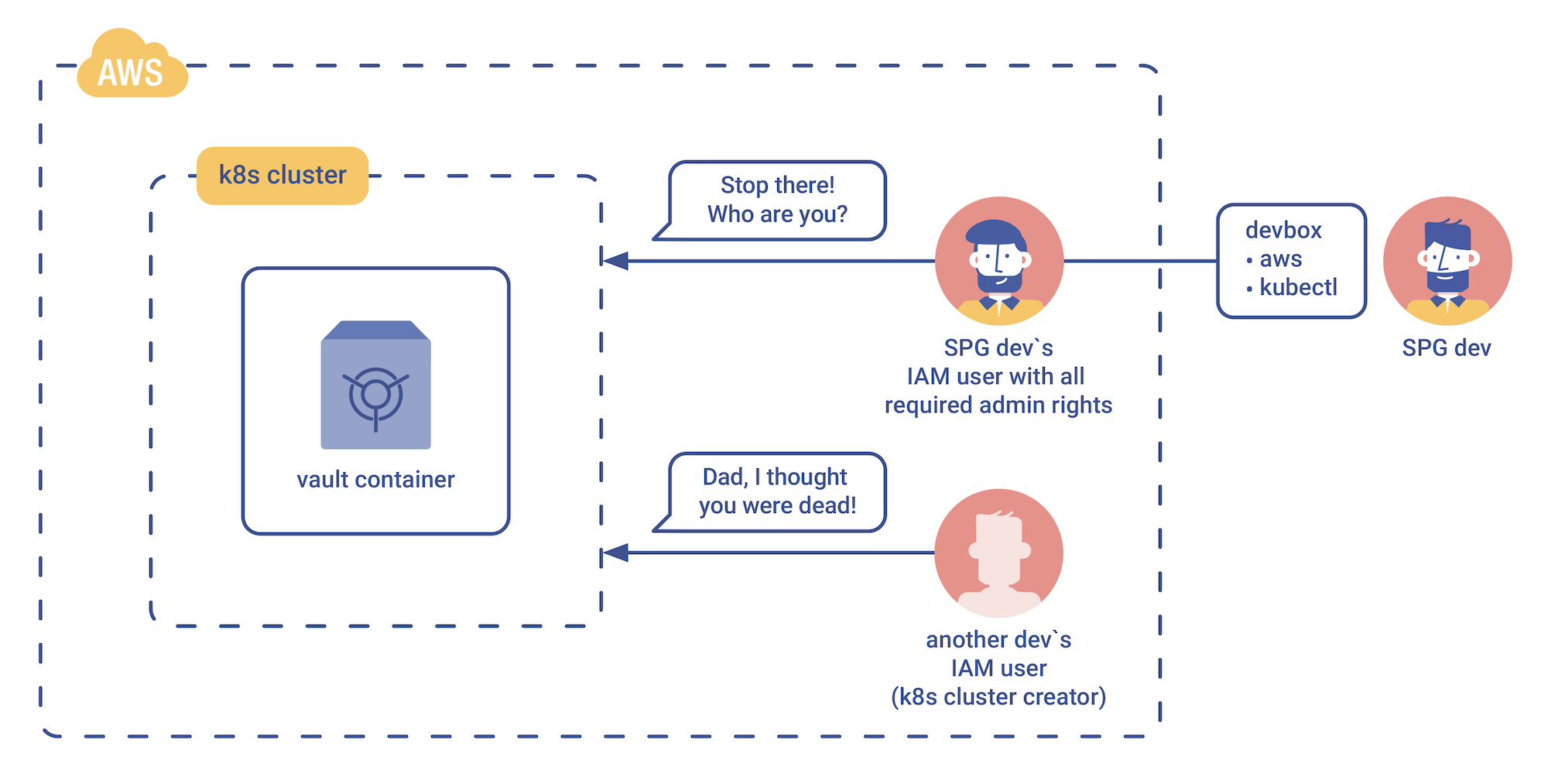

The overlooked detail was the following: when a Kubernetes cluster is created on Amazon EKS, only the cluster creator is able to access it unless access is explicitly granted to other users. This is thoroughly documented in the Amazon EKS documentation, and the important bit is stated below:

“When an Amazon EKS cluster is created, the IAM entity (user or role) that creates the cluster is added to the Kubernetes RBAC authorization table as the administrator (with system:masters permissions). Initially, only that IAM user can make calls to the Kubernetes API server.”

And this is where our troubles began. Though we did have an AWS IAM user of our own — with full admin rights and privileges — Kubernetes wouldn’t let us in because it didn’t recognise our account’s permissions.
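The gap between IAM permissions and Kubernetes permissions can be pictured with a toy model. The sketch below is not how EKS is implemented: the `ClusterAuth` class, its fields and the example ARNs are all hypothetical. It only assumes what the quoted documentation states, namely that the creator is an implicit administrator and everyone else needs an explicit mapping.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClusterAuth:
    """Toy model of cluster access (hypothetical, for illustration only)."""

    # The IAM entity that created the cluster: an implicit administrator.
    creator_arn: str
    # Explicit mappings from IAM ARN to Kubernetes groups (empty by default).
    mappings: Dict[str, List[str]] = field(default_factory=dict)

    def groups_for(self, caller_arn: str, iam_policies: List[str]) -> List[str]:
        # iam_policies is deliberately ignored: in this model, AWS-side
        # permissions play no part in the Kubernetes-side decision.
        if caller_arn == self.creator_arn:
            return ["system:masters"]
        return list(self.mappings.get(caller_arn, []))


cluster = ClusterAuth(creator_arn="arn:aws:iam::123456789012:user/original-creator")
new_admin = "arn:aws:iam::123456789012:user/new-admin"

# A full AWS administrator who is neither the creator nor mapped gets nothing.
print(cluster.groups_for(new_admin, ["AdministratorAccess"]))  # prints []
```

In this model, the only ways in are to be the creator or to be added to the mappings by someone who already has access.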

How to Regain Control Over Your K8s Cluster

Thankfully, we then recalled how Kubernetes’ RBAC and IAM work together and wagered that if the name of our IAM user could match the name of the original cluster creator, then we would likely be given the go-ahead by Kubernetes. And just like that, our task became to figure out what that elusive cluster creator had been called. But this was also easier said than done, as the information was kept beyond our grasp, within Kubernetes itself.
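The reasoning here rests on how IAM users are addressed. A user's ARN is built from the account ID, an optional path and the user name, so a user recreated with the same name and path in the same account receives the same ARN as the deleted one. The sketch below assumes that the cluster recognises its creator by that ARN, which is our interpretation of why a matching name was enough. The helper name, account ID and user names are made up for illustration.

```python
def iam_user_arn(account_id: str, user_name: str, path: str = "/") -> str:
    """Build the ARN of an IAM user in the standard `aws` partition."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits")
    if not (path.startswith("/") and path.endswith("/")):
        raise ValueError("IAM paths start and end with '/'")
    # With the default path "/", this yields ...:user/<name>.
    return f"arn:aws:iam::{account_id}:user{path}{user_name}"


deleted_creator = iam_user_arn("123456789012", "eks-deployer")
recreated_user = iam_user_arn("123456789012", "eks-deployer")
other_admin = iam_user_arn("123456789012", "new-admin")

print(deleted_creator == recreated_user)  # prints True
print(deleted_creator == other_admin)     # prints False
```

Note that the path is part of the ARN, so a creator that lived under a non-default path would need to be recreated under the same path.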

In fact, to complicate matters even further, the previous development team was rather large, so even if we somehow did gain access to the entire history of IAM users, we would still have to comb through all of them individually.

After observing the system’s infrastructure a little more, we noticed that it was built with Terraform — an open-source IaC tool — and as it turns out, Terraform’s native *.tf files contain details of how Kubernetes clusters are created.

It was as if all of our developers’ prayers had been answered at once, as this in turn led us to the cluster’s RBAC settings, where we could find details of every identity that had been granted access at any point in time.
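A search like this can be partly automated. The following is a minimal sketch, assuming the Terraform files declare IAM users with `aws_iam_user` resources or mention IAM ARNs as literal strings. It will miss names that are assembled from variables, and the function names are our own, not part of Terraform or any AWS tool.

```python
import re
from pathlib import Path
from typing import Dict, List, Tuple

# Literal IAM user or role ARNs appearing anywhere in the text.
ARN_RE = re.compile(r"arn:aws:iam::\d{12}:(?:user|role)/[\w+=,.@/-]+")

# The `name` attribute inside an `aws_iam_user` resource block.
USER_RESOURCE_RE = re.compile(
    r'resource\s+"aws_iam_user"\s+"[^"]+"\s*\{[^}]*?\bname\s*=\s*"([^"]+)"',
    re.S,
)


def find_iam_candidates(tf_text: str) -> Tuple[List[str], List[str]]:
    """Return (ARNs, user names) found in one Terraform file's text."""
    arns = sorted(set(ARN_RE.findall(tf_text)))
    names = sorted(set(USER_RESOURCE_RE.findall(tf_text)))
    return arns, names


def scan_directory(root: str) -> Dict[str, Tuple[List[str], List[str]]]:
    """Scan every *.tf file under `root` and keep files with any hit."""
    results = {}
    for tf_file in Path(root).rglob("*.tf"):
        arns, names = find_iam_candidates(tf_file.read_text(encoding="utf-8"))
        if arns or names:
            results[str(tf_file)] = (arns, names)
    return results
```

Calling `scan_directory(".")` from the root of the infrastructure repository gives a short list of candidate identities to check, rather than a whole team's worth of IAM history.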

From there, all we had to do was create a new IAM user with the same username as the cluster creator and turn the key to our brand new bedroom, which opened without a hitch.
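For illustration, the recreation step might look like the sketch below. It assumes an IAM client such as the one returned by `boto3.client("iam")` and relies only on its `create_user` call. The function name, the `expected_arn` check and the example values are our own additions, not a record of the exact commands we ran.

```python
def recreate_creator(iam_client, user_name: str, expected_arn: str, path: str = "/") -> str:
    """Create an IAM user and confirm its ARN matches the old creator's ARN."""
    response = iam_client.create_user(UserName=user_name, Path=path)
    actual_arn = response["User"]["Arn"]
    if actual_arn != expected_arn:
        # A different account or path produces a different ARN, in which
        # case the new user would not stand in for the original creator.
        raise RuntimeError(f"ARN mismatch: got {actual_arn}, expected {expected_arn}")
    return actual_arn


# Hypothetical usage with real credentials:
#   import boto3
#   recreate_creator(
#       boto3.client("iam"),
#       "eks-deployer",
#       "arn:aws:iam::123456789012:user/eks-deployer",
#   )
```

The new user still needs credentials of its own and a kubeconfig that points at the cluster before `kubectl` can be used with it.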

The Moral of the Story

As our customers had to learn the hard way, if you are worried about data security, do your homework before attempting to tamper with your infrastructure. Your cluster creators matter, so as a first priority, make sure that their IAM accounts remain entirely under your control. At the very least, do your best not to delete them by accident before you are certain that all of their privileges have been transferred over to your new dev team.
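Transferring privileges means giving another IAM identity its own mapping while the creator can still log in. One common place for this is the `mapUsers` section of the cluster's `aws-auth` ConfigMap, whose entries carry `userarn`, `username` and `groups` fields. The sketch below treats that section as a plain list of dictionaries. The helper name and the ARN are hypothetical, and writing the result back to the cluster is left out.

```python
import copy
from typing import Dict, List

ADMIN_GROUP = "system:masters"


def ensure_admin_mapping(map_users: List[Dict], user_arn: str, username: str) -> List[Dict]:
    """Return a copy of `map_users` in which `user_arn` has admin access."""
    updated = copy.deepcopy(map_users)  # leave the caller's list untouched
    for entry in updated:
        if entry.get("userarn") == user_arn:
            # Already mapped: add the admin group only if it is missing.
            groups = entry.setdefault("groups", [])
            if ADMIN_GROUP not in groups:
                groups.append(ADMIN_GROUP)
            return updated
    updated.append({"userarn": user_arn, "username": username, "groups": [ADMIN_GROUP]})
    return updated


current = []  # a fresh cluster with no explicit mappings
new_lead = "arn:aws:iam::123456789012:user/new-lead"
print(ensure_admin_mapping(current, new_lead, "new-lead"))
```

Because the function is idempotent, it is safe to run again as a check before any old accounts are removed.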

Proper use of Infrastructure as Code is always a good idea, but it’s even better to map out your infrastructure’s layout and do a bit of planning ahead. At SPG, we can help you to implement IaC at the very beginning of a project or whenever you find it lacking in your product.

Get Help With Your Infrastructure

If you have any other questions about Amazon EKS or Kubernetes clusters, be sure to get in contact with our development team!
