When a new feature lands in the hands of testers, there is always a moment of excitement. Many testers enjoy exploratory testing – the freedom of clicking through the interface, trying different combinations, and discovering surprises. This energy is valuable, but there is a catch. If the tester does not fully understand the system or the requirements behind it, their testing can become shallow. Instead of following the real-life paths users will take, they test only what appears on the screen in front of them.

On the other end of the spectrum lie detailed, step-by-step test cases. These are supposed to bring order, yet they often create their own problems. When every button click is prescribed, testers risk becoming robots. The intent behind the test disappears in the forest of detail. The tests take a long time to prepare, are hard to maintain, and make planning cumbersome. In a future piece we will look at how to act in line with human instincts rather than against them – because in testing, instinct matters.


The Problem with Traditional Test Cases in SaaS

In the fast-moving world of web SaaS, rigid test scripts quickly turn into a liability. Defining exactly “how” to test each scenario assumes users will follow the same narrow path – an assumption that rarely holds true. Real customers behave unpredictably, and many production defects surface off-script, in edge conditions that detailed cases do not capture. Step-by-step test cases can therefore create a dangerous illusion of full coverage while leaving critical flows untested.

Maintenance is another drain. In SaaS, UIs and features evolve continuously, and each change means rewriting dozens of test steps until the documentation becomes bloated and unmanageable. One tester noted that a simple checklist of twenty high-level checks replaced dozens of redundant test cases, avoiding a “huge inflation of documentation”. Detailed cases also fall victim to the “pesticide paradox”: the more often the same cases are repeated, the fewer new issues they reveal. The result is wasted effort and a false sense of security.

And perhaps most importantly, prescriptive test cases restrict the tester’s role. Instead of thinking critically and probing for weaknesses, testers are reduced to script followers. This mechanical work drains engagement and ultimately reduces the number of meaningful defects uncovered.

Test Scenarios as a Lean Alternative

We wanted a format that kept discipline but left room for creativity: quick to write, flexible enough for the tester, yet structured enough for forecasting workload and tracking coverage. The format we came to rely on was the test scenario.

At the highest level we work with epics. If you stripped away everything else and only kept the epics, you would still see the shape of the system we are building. Each epic is then fleshed out with user stories, which carry acceptance criteria such as “a successful login takes the user to the dashboard” or “an incorrect password shows an error message”.

Test scenarios extend these criteria. They capture the tester’s view of risks and edge cases: “try XSS injection”, “check email validation patterns”, “verify localisation”. Instead of 20 rigid steps, you have one focused scenario that encourages exploration while still pointing to what matters.
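To make the hierarchy concrete, here is a minimal sketch of how epics, user stories, acceptance criteria and scenarios might be modelled so that every scenario traces back to its story and epic. It assumes a simple in-memory model; the class names, the `trace` method and the epic title "User authentication" are hypothetical and for illustration only, while the criteria and scenario titles come from the examples above.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    """One tester-owned check: states *what* to probe, not *how* (hypothetical model)."""
    title: str


@dataclass
class UserStory:
    title: str
    acceptance_criteria: list[str]
    # Scenarios extend the acceptance criteria with risks and edge cases.
    scenarios: list[Scenario] = field(default_factory=list)


@dataclass
class Epic:
    title: str
    stories: list[UserStory] = field(default_factory=list)

    def trace(self) -> list[tuple[str, str, str]]:
        """Flatten to (epic, story, scenario) rows for planning and reporting."""
        return [
            (self.title, story.title, scenario.title)
            for story in self.stories
            for scenario in story.scenarios
        ]


# Illustrative data: the epic title is hypothetical.
login = UserStory(
    title="Log in",
    acceptance_criteria=[
        "A successful login takes the user to the dashboard",
        "An incorrect password shows an error message",
    ],
    scenarios=[
        Scenario("Try XSS injection"),
        Scenario("Check email validation patterns"),
        Scenario("Verify localisation"),
    ],
)
auth = Epic(title="User authentication", stories=[login])

for row in auth.trace():
    print(" > ".join(row))
```

Three short scenario titles replace what could otherwise be dozens of scripted steps, and the flattened rows are enough to report which epics and stories have been exercised.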

The benefits of this approach are significant:

- Flexibility and exploratory freedom – scenarios describe what to test, not how. They adapt easily as the product changes and encourage testers to probe more deeply.

- Efficiency and lower maintenance – scenarios are quick to write and easy to maintain, even when the UI changes. Time saved on documentation can be spent on actual testing.

- Broader coverage and user focus – scenarios mirror real user flows and business goals rather than tiny technical steps. They help QA prioritise customer value and risk.

- Team alignment and planning – because scenarios are written in plain language, they are easier for developers, business analysts, and even clients to understand. They also provide a manageable unit for estimating workloads.

- Agile friendly – lightweight, high-level scenarios align naturally with Agile ways of working. They reinforce the focus on outcomes and customer value, not just box-ticking.

Test Session Planning with Scenarios

Planning a test session starts with identifying the functions that need attention: retesting old functionality, covering new features, and validating closed defects. Some acceptance criteria already have scenarios, while others need to be extended. Sometimes defects slip through existing scenarios, reminding us to strengthen QA by adding new ones.

Increasingly, tools like ChatGPT can help draft scenarios from acceptance criteria or user stories. This reduces the time testers spend preparing, and frees them to concentrate on exploring the system.

Before a session begins, the tester estimates the workload and uses scenarios as their roadmap. Instead of a rigid script, they have a structured plan that still leaves room for judgement. After the session, the output is a detailed log of what was tested: features covered, edge cases attempted, and methods applied. This record captures both the breadth of coverage and the depth of exploration – something traditional cases rarely achieve.
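The planning loop above can be sketched in a few lines: the tester picks scenarios from the three sources (new features, regression, defect retests), attaches an estimate to each, and after the session compares the log against the plan. This is a minimal illustration, not a prescribed tool; the field names, the minute-based estimates and the coverage formula (share of planned scenarios that appear in the log) are assumptions, and all titles and notes are hypothetical example data.

```python
from dataclasses import dataclass


@dataclass
class PlannedScenario:
    title: str
    source: str          # hypothetical: "new feature", "regression" or "defect retest"
    estimate_min: int    # tester's own time estimate, in minutes


@dataclass
class LogEntry:
    scenario: str
    passed: bool
    notes: str = ""      # edge cases tried, methods applied


def session_estimate(plan: list[PlannedScenario]) -> int:
    """Total forecast effort for the session, in minutes."""
    return sum(item.estimate_min for item in plan)


def coverage(plan: list[PlannedScenario], log: list[LogEntry]) -> float:
    """Share of planned scenarios that actually appear in the session log."""
    if not plan:
        return 0.0
    executed = {entry.scenario for entry in log}
    return sum(item.title in executed for item in plan) / len(plan)


# Hypothetical session plan and log, for illustration only.
plan = [
    PlannedScenario("Login: try XSS injection", "new feature", 30),
    PlannedScenario("Login: verify localisation", "new feature", 20),
    PlannedScenario("Password reset still works", "regression", 15),
    PlannedScenario("Retest closed email-validation defect", "defect retest", 10),
]
log = [
    LogEntry("Login: try XSS injection", True, "script tags in email and password fields"),
    LogEntry("Login: verify localisation", True, "checked two locales"),
    LogEntry("Password reset still works", True),
]

print(f"Estimated effort: {session_estimate(plan)} min")
print(f"Coverage: {coverage(plan, log):.0%}")
```

Here the plan forecasts 75 minutes and the log shows three of four scenarios exercised, so the unexecuted defect retest stands out for the next session.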

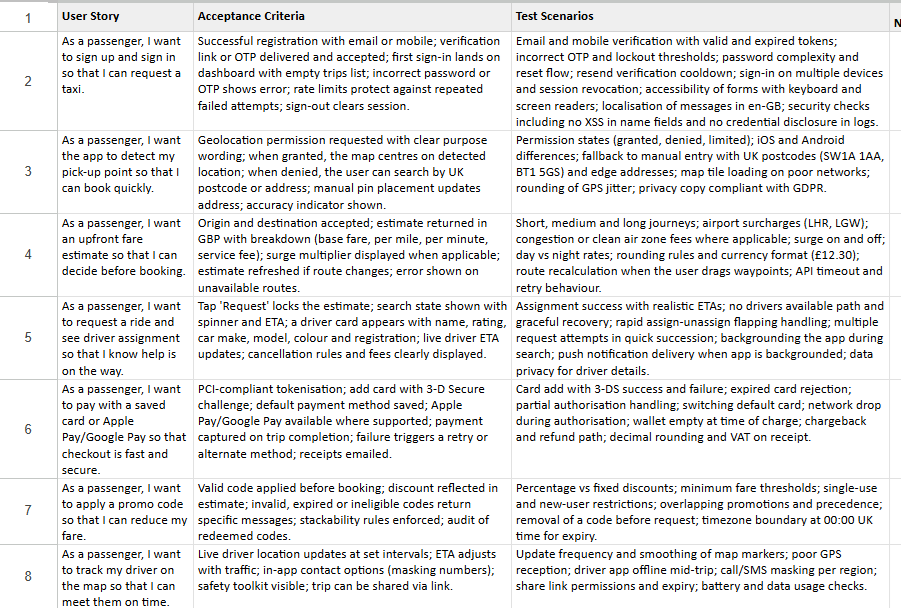

Picture 1. Example of a test session plan written in a Google Sheets worksheet

Real-World Lessons

Across the industry, many teams have successfully abandoned heavy test cases in favour of scenarios. A Kanban team reported dropping test cases entirely, as user stories became small enough that scenarios were obvious. Others replaced dozens of documents with a one-page specification per feature, saving days of overhead while maintaining clarity.

Testers themselves report feeling more engaged, asking more “what if” questions, and uncovering more critical defects once freed from scripts. Teams also find that scenarios make onboarding easier and improve communication, since everyone – from developers to business analysts – can understand them.

Even managers who once worried about traceability have found that scenario-based metrics (coverage of user journeys, number of scenarios executed per sprint) are both leaner and more meaningful than counting thousands of test cases. One QA manager noted their test suite shrank by 30 per cent while actual coverage increased.

In the end, the point of QA is not to produce artefacts; it is to produce confidence. Confidence that the things customers care about work today, will still work after tomorrow’s release, and will keep working as the product grows. When we judge testing by the weight of its paperwork, we get formality without insight. When we judge it by how well it protects value and reduces risk, we get effectiveness.

Scenario-led testing is the pragmatic middle path. It formalises what must be predictable – scope, intent, coverage, traceability – and deliberately leaves the “how” open enough for human judgement. Scenarios tie back to epics and user stories, so planning and reporting stay transparent. They scale with change because they describe outcomes, not keystrokes. They give testers room to think, probe, and discover what scripted steps will miss, while still letting managers forecast effort and measure progress in meaningful units.

Modern tools now make this discipline lighter, not heavier. We can draft scenarios from acceptance criteria in minutes, maintain compact catalogues of risks and patterns, generate realistic data, and automate the repetitive parts of regression in CI. The hours we used to spend writing and re-writing step lists are returned to where they matter most – thoughtful exploration, sharper conversations with developers and product owners, and faster feedback loops.

This is also a cultural choice. Treat testers as investigators, not operators. Keep documentation lean and comprehensible so new team members can orient quickly. Align language with the business so decisions are easier to make. Replace a false sense of safety from thousands of steps with clear evidence that the right journeys were exercised, the risky paths were challenged, and the results are known.

If there is a single takeaway, it is this: choose effectiveness over formality. Use scenarios to keep testing predictable where it must be and adaptable where it should be. Pair lightweight documentation with smart automation and experienced human judgement. That is how SaaS teams move quickly without breaking trust – less drag, more insight, and quality that keeps pace with change.

About the Author

Oleksii Smirnov is the CEO of Software Planet Group. With more than 20 years of experience in the technical management of software projects, he has guided teams in delivering complex solutions for clients across a wide range of industries.